Content moderation plays a central role in keeping online spaces safe, inclusive, and respectful. The internet, once hailed as a platform for free expression, is now a space where billions of users interact, share information, and express their thoughts. With that freedom comes responsibility: content moderators must balance unrestricted expression against protecting users from harmful or inappropriate content, and they face that trade-off every day.

Defining Content Moderation:

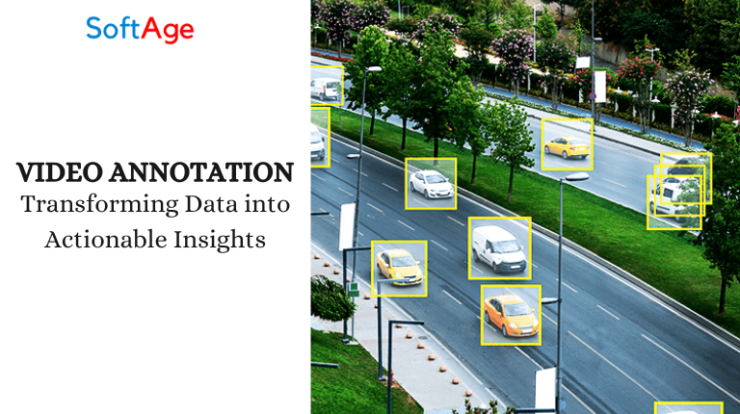

Content moderation refers to the process of monitoring and regulating user-generated content on digital platforms. This can include text, images, videos, and other forms of media shared on social media, forums, websites, and applications. The primary objective of content moderation is to prevent the dissemination of harmful, illegal, or inappropriate content, thereby fostering a positive online experience for users.

The Challenges of Content Moderation:

Scale and Volume: The sheer volume of content uploaded every second poses a significant challenge for content moderation teams. Platforms like Facebook, Twitter, and YouTube receive millions of posts daily, which makes manual review of every piece of content impractical.

Contextual Ambiguity: Determining the context of a post can be complex. A harmless phrase in one context might be offensive in another. Content moderation teams must consider cultural nuances, sarcasm, and colloquial language to avoid misinterpretations.

Emergence of New Content: The internet is constantly evolving, giving rise to new forms of content such as deepfakes and memes that blur the line between reality and fiction. Moderators need to adapt to these emerging challenges promptly.

Preserving Freedom of Speech: Striking a balance between curbing harmful content and upholding freedom of speech is a constant dilemma. Overzealous moderation may lead to censorship, hindering open discourse, while lax moderation can result in the spread of misinformation and hate speech.

Content Moderation Strategies:

Automated Filters and AI: Artificial Intelligence (AI) and machine learning algorithms are employed to automatically flag potentially problematic content. These tools can process large volumes of data, but they are not flawless: they can miss context, flagging harmless posts (false positives) or letting harmful ones through (false negatives).

Human Moderators: Human moderators play a crucial role in content moderation, especially in deciphering context and understanding cultural nuances. Their expertise is invaluable in handling nuanced cases that automated systems might misinterpret.

Community Reporting: Many platforms encourage users to report inappropriate content. Community reporting engages users in the moderation process, allowing them to actively contribute to a safer online environment.
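The three strategies above are often combined into a single triage flow. The following is a minimal sketch of that idea, not a description of how any particular platform works. The blocklist, the thresholds, the `automated_score` function (a toy stand-in for a real classifier), and every other name here are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class Post:
    text: str
    report_count: int = 0  # how many users reported this post


# Hypothetical values for illustration only; a real system would tune these.
BLOCKED_TERMS = {"scamlink", "buy followers"}
REMOVE_THRESHOLD = 0.9    # at or above this score, remove automatically
REVIEW_THRESHOLD = 0.4    # at or above this score, send to a human
REPORTS_FOR_REVIEW = 3    # this many user reports also triggers human review


def automated_score(text: str) -> float:
    """Toy stand-in for an ML classifier: fraction of blocked terms found."""
    lowered = text.lower()
    hits = sum(1 for term in BLOCKED_TERMS if term in lowered)
    return hits / len(BLOCKED_TERMS)


def triage(post: Post) -> Decision:
    """Combine the automated score and community reports into one decision."""
    score = automated_score(post.text)
    if score >= REMOVE_THRESHOLD:
        return Decision.REMOVE
    # Uncertain scores and heavily reported posts go to human moderators.
    if score >= REVIEW_THRESHOLD or post.report_count >= REPORTS_FOR_REVIEW:
        return Decision.HUMAN_REVIEW
    return Decision.ALLOW


if __name__ == "__main__":
    for post in [
        Post("Nice photo!"),
        Post("Is this scamlink thing legit?"),
        Post("Buy followers at scamlink today"),
        Post("Nice photo!", report_count=3),
    ]:
        print(f"{post.text!r} (reports={post.report_count}): {triage(post).value}")
```

The design choice worth noting is the middle band: only high-confidence cases are handled automatically, while ambiguous scores and user-reported posts are routed to people, who are better placed to judge context.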

Ethical Considerations in Content Moderation:

Content moderation raises ethical questions about who decides what is acceptable and what is not. Platforms must establish clear guidelines and policies, ensuring transparency in their moderation processes. Additionally, addressing biases within moderation systems is crucial to prevent unfair treatment of certain communities or opinions.

The Future of Content Moderation:

The future of content moderation lies in continued collaboration between technology and human expertise. Improvements in AI algorithms, combined with the discernment of human moderators, will enhance the accuracy of content moderation. Additionally, educating users about responsible online behavior and critical thinking can mitigate the spread of harmful content.

Conclusion:

Content moderation is a complex and multifaceted challenge in the digital age. Striking the right balance between freedom of speech and responsible regulation is essential to create a safe online environment for all users. As technology evolves, so too must our approaches to content moderation, ensuring that the internet remains a space for open dialogue, creativity, and positive interactions while safeguarding against the potential harm that unregulated content can cause.